How to scale Laravel: beyond the basics (Advanced Laravel Scaling)

In this post we’ll be talking about some lesser-known methods that helped me scale my Laravel apps to millions of users. I’ve got 5 great tips for you, so let's dive in.

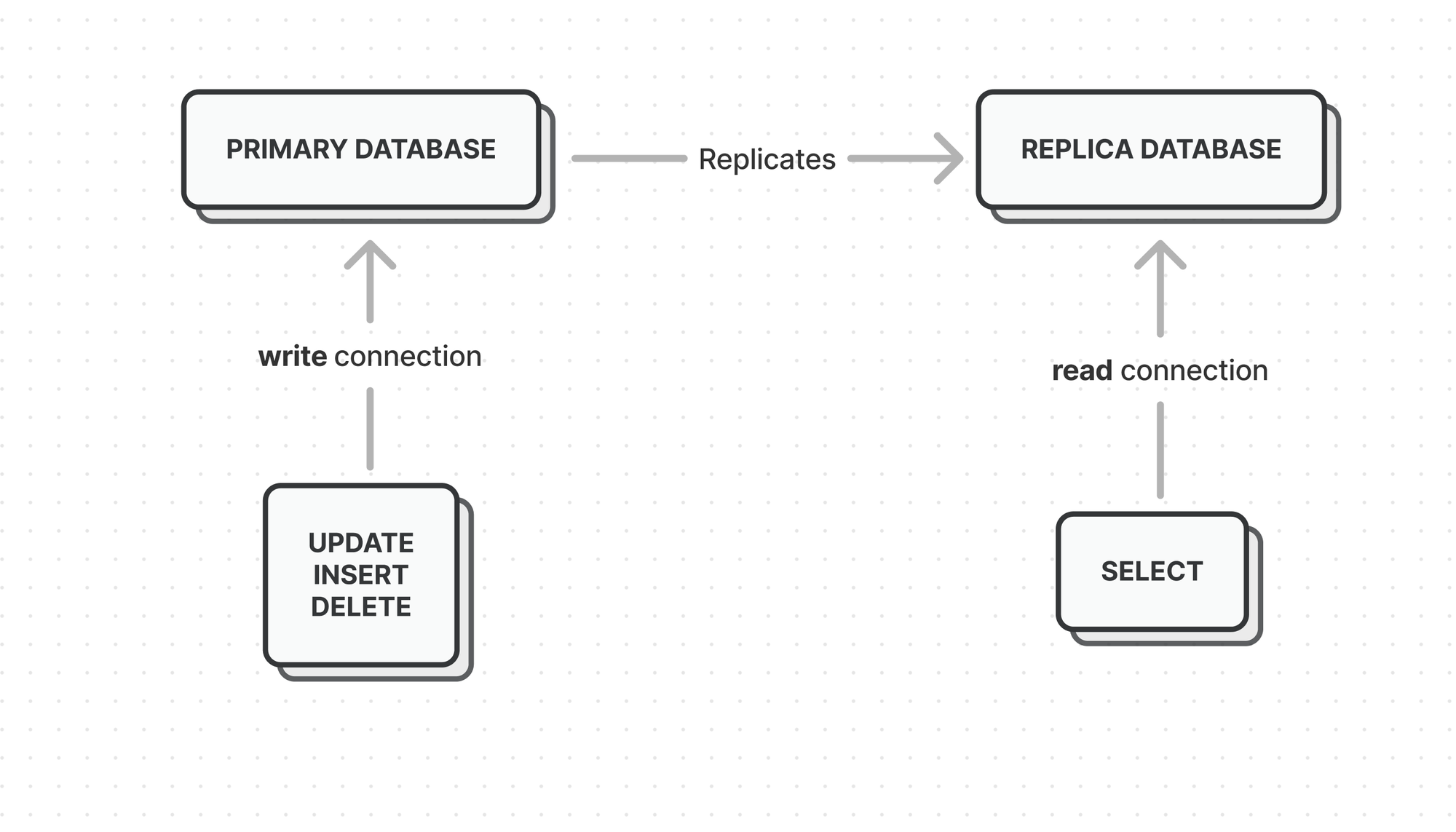

1. Database read/write connection

An effective strategy to manage the load of your database is to separate your read and write operations into different database connections. This approach can significantly improve performance and helps ensure your application remains responsive under heavy load.

Read/write splitting allows you to direct read operations (like SELECT queries) to a replica database, while write operations (like INSERT, UPDATE, DELETE) go to the primary database. This helps distribute the load more evenly and prevent bottlenecks.

In our multi-tenant food ordering app, we have a single database for all tenants. Friday evenings are the busiest period on the platform, with over 40 orders per second at the peak.

Having a read-replica ensures that our merchants aren’t affected by all these write operations when they are looking up the orders they have to prepare.

Configuring Laravel to use multiple connections is pretty easy:

You’ll need to define your read and write connections in the connections array of your config/database.php file. For instance:

    'mysql' => [
        // Replicas that serve SELECT queries.
        'read' => [
            'host' => [
                '192.168.1.1',
                '196.168.1.2',
            ],
        ],
        // Primary database that receives INSERT, UPDATE and DELETE queries.
        'write' => [
            'host' => [
                '192.168.1.3',
            ],
        ],
        'sticky' => true,
        'driver' => 'mysql',
        'database' => env('DB_DATABASE', 'forge'),
        'username' => env('DB_USERNAME', 'forge'),
        'password' => env('DB_PASSWORD', ''),
        'unix_socket' => env('DB_SOCKET', ''),
        'charset' => 'utf8mb4',
        'collation' => 'utf8mb4_unicode_ci',
        'prefix' => '',
        'strict' => true,
        'engine' => null,
    ],

The sticky option ensures that, once a write has happened during the current request cycle, any reads that follow use the write connection. This matters because there may be some delay before the read replica is up to date.

To set up a read replica on our multi-tenant food ordering app, we used AWS Database Migration Service. We had to add the replica after the fact because, 10 years ago when I set up the database, I didn’t know any better. The service allowed us to do that without any downtime. If I were to start a new project today, I’d set up a read replica from the beginning, saving me a lot of hassle down the line.
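One thing to keep in mind: sticky only helps within a single request cycle. If a queued job or a later request has to read a row right after it was written, you can explicitly ask for the write connection. Here's a minimal sketch, assuming an `Order` model and an `orders` table with a `status` column (both function names are made up for illustration):

```php
<?php

use App\Models\Order;
use Illuminate\Support\Facades\DB;

// Hypothetical helper: load an order from the primary database,
// so replication lag on the replica cannot return stale data.
function findOrderOnPrimary(int $orderId): ?Order
{
    return Order::onWriteConnection()->find($orderId);
}

// Hypothetical helper: the same idea with the query builder.
// The `status` column and its 'open' value are assumptions.
function countOpenOrdersOnPrimary(): int
{
    return DB::table('orders')
        ->useWritePdo()
        ->where('status', 'open')
        ->count();
}
```

I'd use this sparingly. Every read you force onto the primary is load the replica could have absorbed.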

2. Batch inserts/updates

Instead of executing multiple individual queries, batch operations allow you to insert multiple records in a single query, reducing database overhead and speeding up your application. Let’s take this code for example.

    <?php

    use App\Models\Order;
    use Illuminate\Support\Facades\DB;

    $cart = [
        ['product' => 'Apple', 'amount' => 3],
        ['product' => 'Banana', 'amount' => 2],
        ['product' => 'Cherry', 'amount' => 4],
        ['product' => 'Pear', 'amount' => 5],
    ];

    // Create the parent order first, so we have an ID for the line items.
    $order = Order::create([
        'name' => 'Order at ' . date('Y-m-d H:i:s'),
    ]);

    // Attach the order ID to every row in the cart.
    $cart = collect($cart)->map(function ($item) use ($order) {
        $item['order_id'] = $order->id;

        return $item;
    });

    // Insert all line items with a single query.
    DB::table('line_items')->insert($cart->toArray());

Here we have a fictional cart with multiple line items. We want to convert this cart into an order with associated line items. Your first instinct may be to loop over the cart and create the LineItem models one by one, but this will execute one query per line item.

We can make this much more efficient, by using the Query Builder directly as follows:

    DB::table('line_items')->insert($cart->toArray());

This way, we will only execute a single query, no matter how many line items there are in your cart.

This method comes with the drawback of bypassing every model event, because you interact directly with the database. This also means you won’t get free functionality like automatic timestamps, so plan accordingly!
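To work around the missing timestamps, you can add them yourself before inserting. Below is a minimal sketch of how that could look. The helper names, the chunk size of 500, and the `created_at`/`updated_at` columns on `line_items` are assumptions for illustration, not something taken from our codebase:

```php
<?php

use Illuminate\Support\Facades\DB;

// Hypothetical helper: add the order ID and timestamps to every cart row.
// Keys that already exist on a row are kept as they are.
function prepareLineItems(array $cart, int $orderId, string $now): array
{
    return array_map(
        fn (array $item): array => $item + [
            'order_id' => $orderId,
            'created_at' => $now,
            'updated_at' => $now,
        ],
        $cart
    );
}

// Hypothetical helper: insert the rows in chunks inside one transaction,
// so a very large batch does not turn into one gigantic query.
function insertLineItems(array $rows, int $chunkSize = 500): void
{
    DB::transaction(function () use ($rows, $chunkSize) {
        foreach (array_chunk($rows, $chunkSize) as $chunk) {
            DB::table('line_items')->insert($chunk);
        }
    });
}
```

Usage would be something like `insertLineItems(prepareLineItems($cart, $order->id, now()->toDateTimeString()));`. Note that every row needs the same set of keys, because they all end up in the same INSERT statement.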

3. Full page cache

By caching the entire HTML output of a page, Laravel can bypass the complex process of generating views, querying databases, and executing business logic for each request.

Implementing full page cache in Laravel involves middleware that checks for the cached version of a page before proceeding to the controller. If a cached version is found, it’s served immediately; otherwise, the request is processed normally and the output is cached for future use.

In our food ordering web app we put all of our menu pages in a full page cache for 30 seconds. Now this may sound like a short time, but at peak times we get hundreds of visitors per second, so this cache drastically reduces load on the server.
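To make the middleware idea concrete, here is a bare-bones sketch of what such a middleware could look like if you wrote it by hand. This is not how any particular package implements it. The class name, the `page:` key prefix and the `X-Page-Cache` header are made up for illustration:

```php
<?php

namespace App\Http\Middleware;

use Closure;
use Illuminate\Http\Request;
use Illuminate\Support\Facades\Cache;
use Symfony\Component\HttpFoundation\Response;

// Hypothetical hand-rolled full page cache middleware.
class CachePage
{
    public function handle(Request $request, Closure $next, int $ttlSeconds = 30): Response
    {
        // Only GET requests are safe to serve from cache.
        if (! $request->isMethod('GET')) {
            return $next($request);
        }

        $key = self::cacheKey($request->fullUrl());

        // Cache hit: return the stored HTML without touching the controller.
        $cached = Cache::get($key);
        if ($cached !== null) {
            return response($cached)->header('X-Page-Cache', 'hit');
        }

        // Cache miss: handle the request normally and store successful output.
        $response = $next($request);
        if ($response->getStatusCode() === 200) {
            Cache::put($key, $response->getContent(), $ttlSeconds);
        }

        return $response;
    }

    // Build a cache key from the full URL, including the query string.
    public static function cacheKey(string $url): string
    {
        return 'page:' . sha1($url);
    }
}
```

This sketch ignores a lot of real-world concerns, such as pages that differ per logged-in user, response headers and cache invalidation, so only apply something like it to public pages that look the same for every visitor.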

Lucky for us, implementing this is pretty easy thanks to Spatie's laravel-responsecache package: https://github.com/spatie/laravel-responsecache

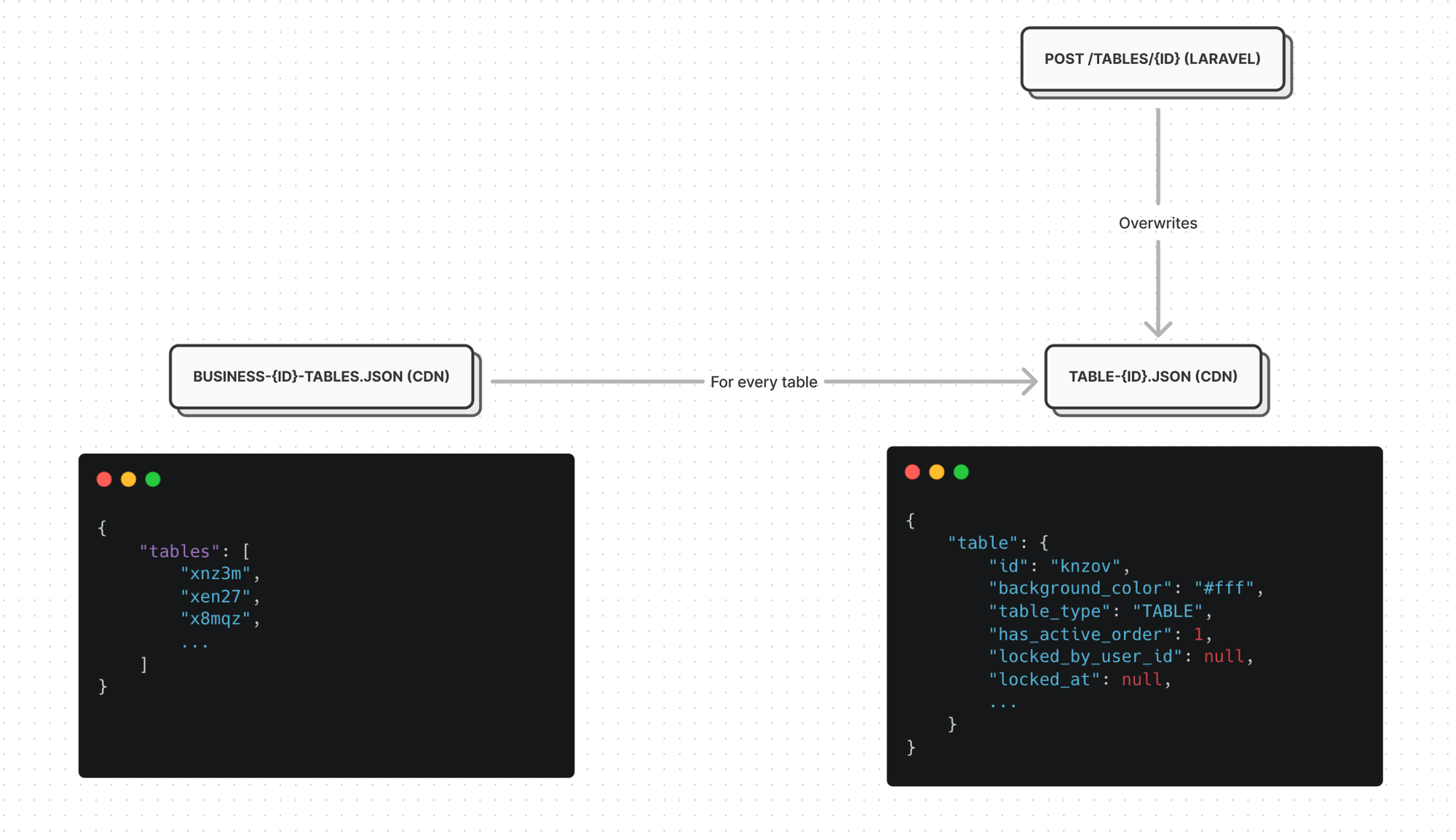

4. Use precomputed JSON files on a CDN

By storing precomputed JSON files on a CDN, for example Amazon S3 combined with CloudFront, you ensure that the data can be requested multiple times without hitting your server. This way you reduce the workload on your Laravel application server (and potentially your database server).

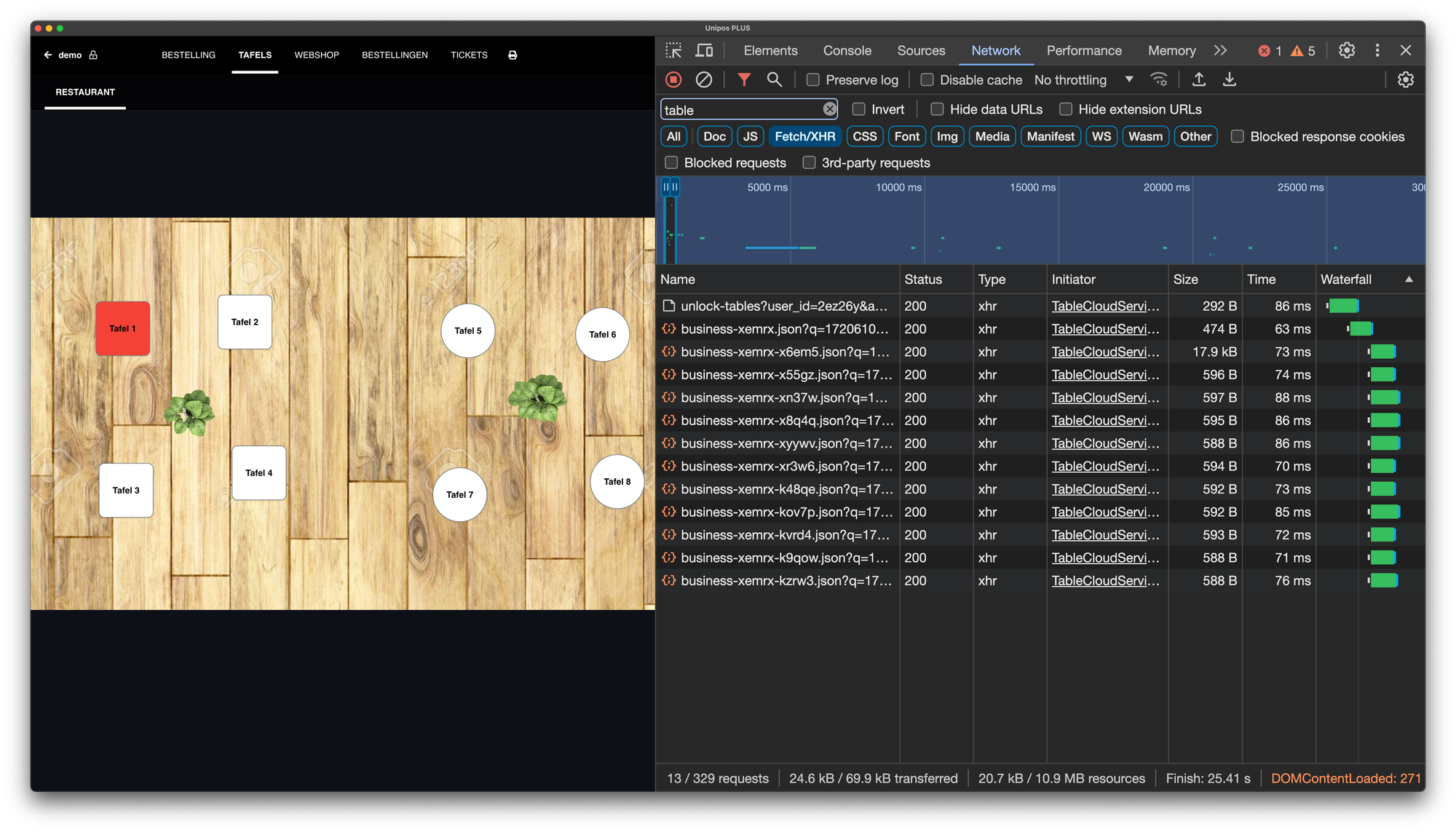

Let’s take a real-world example as a case-study. I developed a POS application that can be used in a restaurant by multiple waiters on multiple devices. In the POS application we have a tableplan that shows tables with an ongoing order in red and others in white. When a table is opened by a waiter, their name also appears on the table and the table is locked for others.

Instead of having an endpoint that returns the entire tableplan with all the details of all the tables, we opted for precalculated JSON files on a CDN.

In the background, our POS app is downloading the following JSON files:

Downloading these files is blazing fast, because they are compact and downloaded in parallel. In addition, CloudFront serves them from the edge location closest to the user, reducing latency even further.

All these JSON files are precalculated by our Laravel application and kept up to date efficiently. When a waiter opens a table, we send a request to our Laravel app, and the app only overwrites the JSON file of that specific table. Finally, we send a websocket event to all other devices, so they can download the updated file and update their UI accordingly.
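The update flow described above could be sketched roughly like this. I'm assuming an `s3` disk in config/filesystems.php that sits behind the CDN. The path layout, the payload fields and the `TableUpdated` broadcast event are hypothetical and only here for illustration:

```php
<?php

use Illuminate\Support\Facades\Storage;

// Hypothetical path layout: one small JSON file per table.
function tableJsonPath(int $restaurantId, int $tableId): string
{
    return "restaurants/{$restaurantId}/tables/{$tableId}.json";
}

// Hypothetical payload: just enough for the POS to draw one table.
function buildTablePayload(int $tableId, ?string $waiterName, bool $hasOpenOrder): string
{
    return json_encode([
        'table_id' => $tableId,
        'status' => $hasOpenOrder ? 'occupied' : 'free',
        'locked_by' => $waiterName,
    ], JSON_THROW_ON_ERROR);
}

// Overwrite the file for this one table, then tell the other devices about it.
function publishTable(int $restaurantId, int $tableId, ?string $waiterName, bool $hasOpenOrder): void
{
    Storage::disk('s3')->put(
        tableJsonPath($restaurantId, $tableId),
        buildTablePayload($tableId, $waiterName, $hasOpenOrder)
    );

    // TableUpdated is a hypothetical event implementing ShouldBroadcast.
    broadcast(new \App\Events\TableUpdated($restaurantId, $tableId));
}
```

The point of the design is that a single table change only rewrites a single tiny file, while every other file stays untouched on the CDN.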

Initially we handled this in our Laravel app using Redis Cache, but this was a big waste of server resources, because even though most of the data never changes, NGINX and FPM were still handling these requests and passing them on to the Laravel app.

Offloading these precalculated JSON files to a CDN was crucial to reduce the workload of our application servers and database.

5. Don’t run synchronous reports

Merchants love to look at reports, especially at busy times. Generating reports is often a time-consuming task, so we learned the hard way not to run reports during an HTTP request.

Instead, put your reporting on a queue, ideally one specifically for reporting so other aspects of your application aren’t affected by slow reports.
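As a rough sketch, the HTTP side then only has to push a job onto the dedicated queue and return. `GenerateSalesReport` is a hypothetical job class (a regular queued job using the `Dispatchable` trait), and the `reports` queue name is an assumption:

```php
<?php

use App\Jobs\GenerateSalesReport; // hypothetical queued job class

// Hypothetical helper, called from a controller: queue the report
// instead of generating it during the HTTP request.
function requestSalesReport(int $merchantId, string $from, string $to): void
{
    GenerateSalesReport::dispatch($merchantId, $from, $to)
        ->onQueue('reports');
}

// A separate worker then only processes that queue, for example:
//   php artisan queue:work --queue=reports
```

Because the worker for the `reports` queue is its own process, a slow report can only delay other reports, not your order processing jobs.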

I’m also going to double down on the first tip: set up a read replica. Don’t do reporting on your main database, because you will end up with slowdowns at peak times.

If you can, also try to schedule your reporting during off-peak times (e.g. at night). For our system, we pre-aggregate all the data nightly in a separate table, so we can run more efficient queries.
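To give an idea of what nightly pre-aggregation can look like, here is a minimal sketch. The `orders` and `daily_sales` tables and all their columns are made up for this example and are not our actual schema:

```php
<?php

use Illuminate\Support\Facades\DB;

// Return the [start, end) datetime bounds of a given day (Y-m-d).
function dayBounds(string $date): array
{
    $start = new DateTimeImmutable($date . ' 00:00:00');

    return [
        $start->format('Y-m-d H:i:s'),
        $start->modify('+1 day')->format('Y-m-d H:i:s'),
    ];
}

// Hypothetical nightly task: roll up one day of orders per merchant.
function aggregateDailySales(string $date): void
{
    [$from, $until] = dayBounds($date);

    DB::transaction(function () use ($date, $from, $until) {
        // Remove earlier results for this day, so the task can be re-run safely.
        DB::table('daily_sales')->where('sales_date', $date)->delete();

        $totals = DB::table('orders')
            ->selectRaw(
                'merchant_id, ? as sales_date, COUNT(*) as order_count, SUM(total) as revenue',
                [$date]
            )
            ->where('created_at', '>=', $from)
            ->where('created_at', '<', $until)
            ->groupBy('merchant_id');

        // INSERT ... SELECT: the database does the aggregation in one statement.
        DB::table('daily_sales')->insertUsing(
            ['merchant_id', 'sales_date', 'order_count', 'revenue'],
            $totals
        );
    });
}
```

Reports can then read a handful of rows from `daily_sales` instead of scanning every order of the past year.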

Finally, make sure you keep an eye on your database's slow query log, and optimise your queries where necessary!

And that concludes the article. I hope you learned a thing or two, and I will see you in the next one!
