DNS management in a multi-tenant setup

DNS management on a larger scale, for example in a multi-tenant application, can be a massive pain. In this article we’ll look at how you can manage hundreds, if not thousands, of custom domains for your multi-tenant application without the headache, so let’s dive in.

Webshops

I’ve been developing a multi-tenant food ordering app for over 10 years now, and in our application merchants get a webshop where they can sell food.

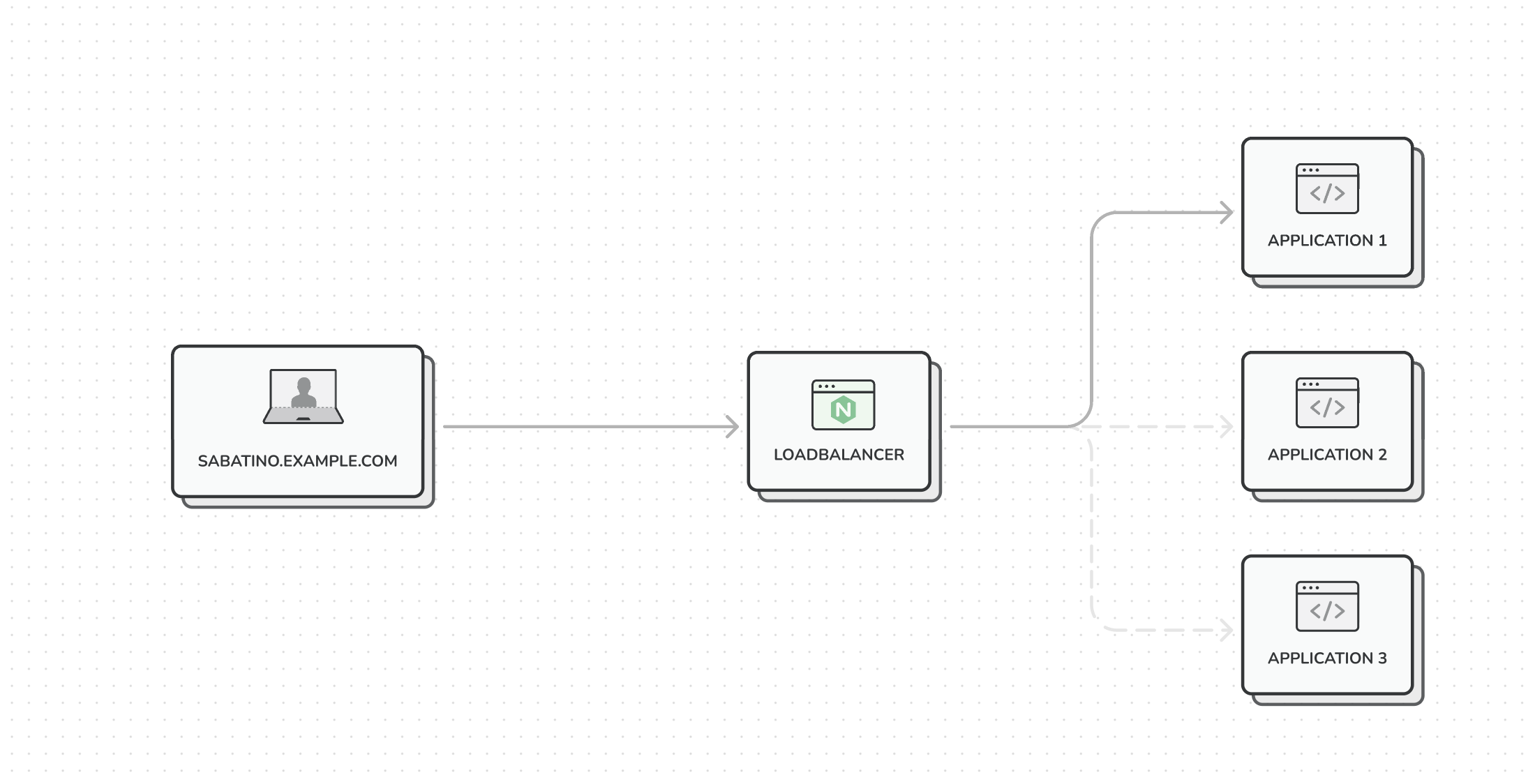

We give each merchant a free subdomain that automatically resolves to our application, for example sabatino.example.com.

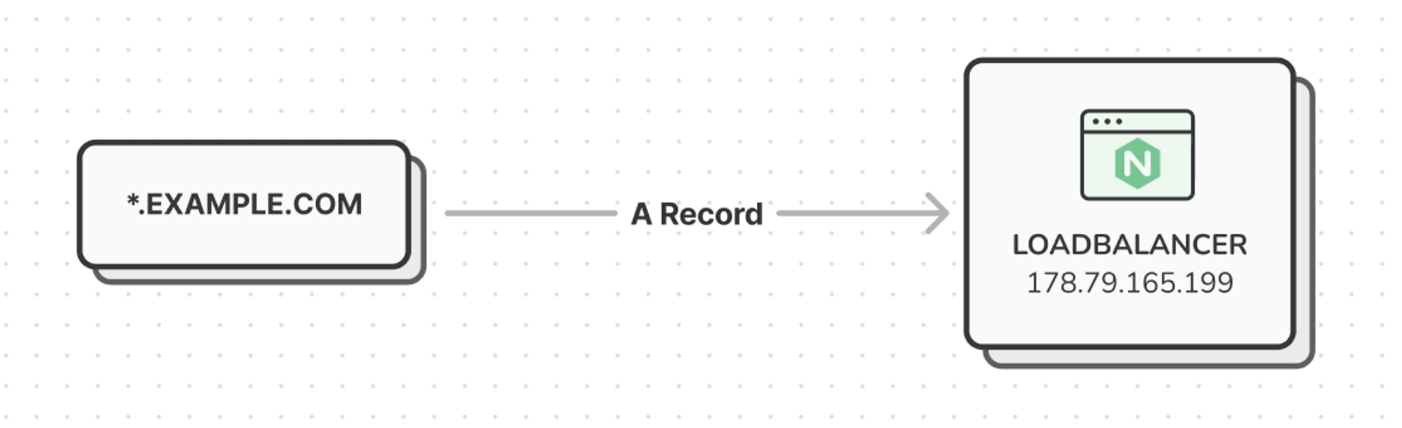

We achieve this with a single DNS record in Cloudflare:

```
A *.example.com 178.79.165.199
```

This wildcard A record points to our loadbalancer, which distributes requests across a pool of application servers running our Laravel app.

Our loadbalancer and application servers are powered by NGINX, and in our server block we have a wildcard virtual server, so NGINX knows how to route all incoming requests from any subdomain.

```nginx
server {
    listen 80;
    listen 443 ssl http2;
    listen [::]:443 ssl http2;

    server_name .example.com; # Wildcard: matches example.com and every subdomain

    # ...
}
```

When a request reaches our Laravel application, a middleware resolves the tenant by querying our domains table for the requested host. If a tenant is resolved successfully, we inject it into the service container.
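Our middleware is part of the Laravel app. The tenant lookup itself is small enough to sketch in a few lines, though, so here it is in Python as an illustration only, not our production code. It uses an in-memory SQLite database shaped like our domains table (domain, merchant_id). The helper name resolve_tenant is hypothetical. Normalising the Host header (stripping the port, lowercasing) is an assumption about what such a middleware should do.

```python
import sqlite3
from typing import Optional


def resolve_tenant(conn: sqlite3.Connection, host: str) -> Optional[int]:
    """Hypothetical helper: map a request's Host header to a merchant_id."""
    # Assumption: the Host header may carry a port and mixed case, so normalise
    # it first. IPv6 literals are not handled; we only expect host names here.
    domain = host.split(":", 1)[0].strip().lower()
    # Parameterised query: never interpolate the Host header into SQL.
    row = conn.execute(
        "SELECT merchant_id FROM domains WHERE domain = ?", (domain,)
    ).fetchone()
    return row[0] if row is not None else None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE domains (domain TEXT PRIMARY KEY, merchant_id INTEGER NOT NULL)"
    )
    # Illustrative data only.
    conn.execute("INSERT INTO domains VALUES ('sabatino.example.com', 42)")
    print(resolve_tenant(conn, "Sabatino.example.com:443"))  # 42
    print(resolve_tenant(conn, "unknown.example.com"))       # None
```

A None result is where you decide how to treat unknown hosts, for example by returning a 404 instead of falling back to some default tenant.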

In SQL, that lookup is a single query:

```sql
SELECT merchant_id FROM domains WHERE domain = 'sabatino.example.com';
```

For 80% of our merchants, this subdomain is ideal: they’re usually not technical and are happy to have a webshop without breaking the bank or having to worry about IT infrastructure.

Another 15% of merchants want a custom domain that we manage for them.

The remaining 5% already have a custom domain that they (or an IT partner) manage, and they want their webshop served from that domain.

Now, let’s look at how we can configure these custom domains.

Routing custom domains

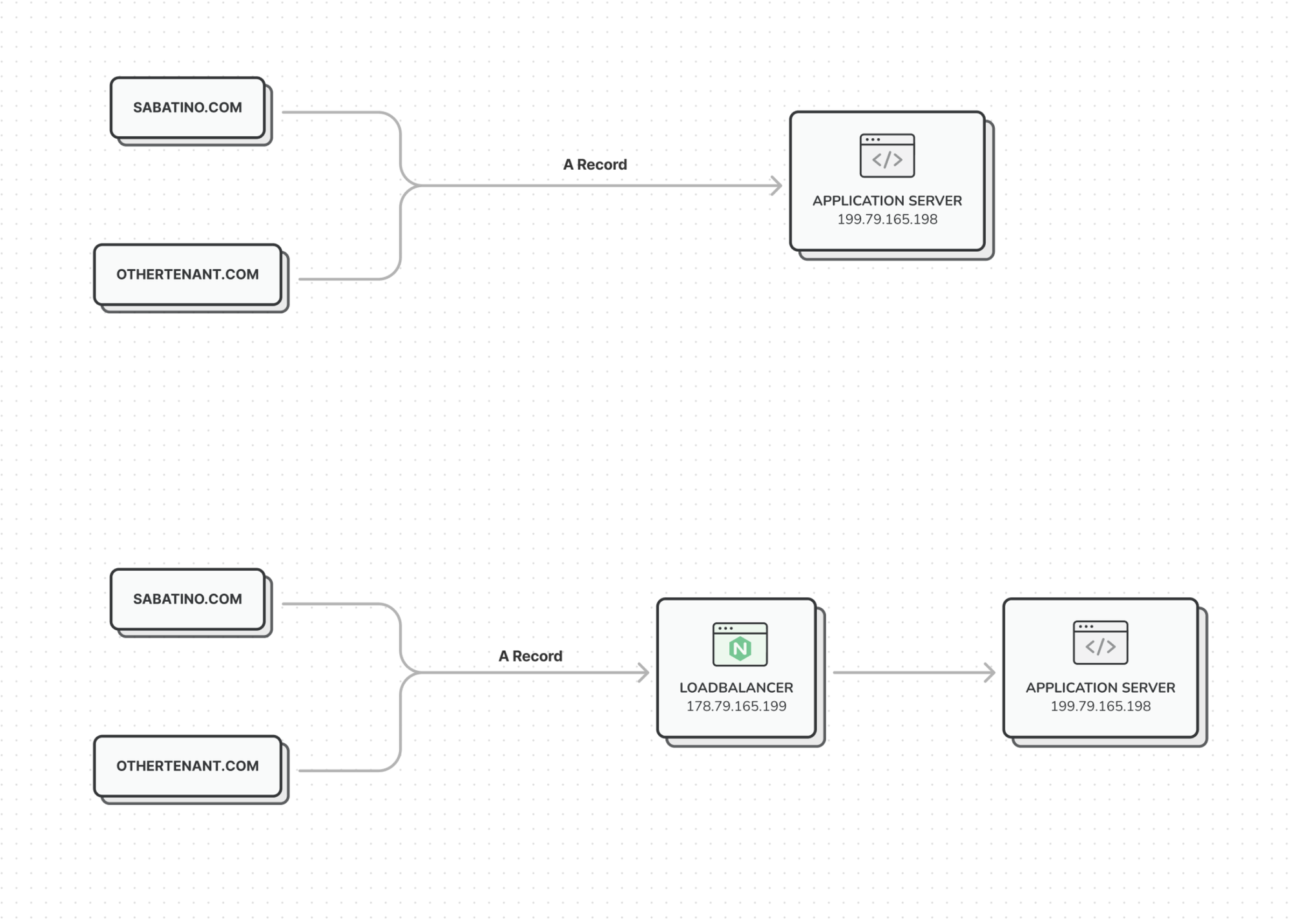

Your first instinct may be to simply create an A record that points the custom domain at the loadbalancer, just like our own wildcard A record. Here’s why that isn’t a good idea.

1) Server configuration

As we saw earlier, NGINX is configured to accept requests for any of our subdomains. Technically, we could add a virtual server for every custom domain, but that means constantly editing our NGINX configuration. That increases the risk of mistakes, and it’s too technical to delegate to my non-technical colleagues.

Ideally, adding a new custom domain shouldn’t require touching the NGINX configuration at all. In a perfect world the whole process is automated, so we don’t have to worry about things like SSL certificates.

2) Server management

Imagine you’re managing 100+ custom domains that all point directly at an application server. If you later introduce a loadbalancer in between, you’re now burdened with updating 100+ DNS records, potentially causing downtime and frustrated customers. On top of that, you may have to coordinate with IT partners, making such a migration a massive pain.

Dynamic reverse proxies

To automate the management of our custom domains, we started looking into OpenResty.

OpenResty is an NGINX distribution that supports Lua scripting, and our idea was simple: create a dedicated server running OpenResty that would act as a ‘router’.

With some Lua scripting, we could then dynamically create a server block with a proxy_pass directive pointing to our loadbalancer.

Even though OpenResty is amazing, we found it a bit too low-level for our use case, so we went looking for alternatives.

One of these alternatives is Ceryx, a programmable reverse proxy built on top of OpenResty with a very simple design. Ceryx queries a Redis backend to decide where to route each request. On top of that, it can provide dynamic SSL certificates for every domain found in the routing table.

When a request comes in, Ceryx first looks up the requested host in Redis to see whether there is a matching route. If there is, it checks whether it has a valid SSL certificate for that domain, and if not, it obtains one automatically.

Finally, thanks to some clever Lua scripting, Ceryx creates a dynamic server block and passes in the variables needed to proxy the request.
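The request flow just described can be modelled in a few lines. The sketch below is a toy Python model of the logic, not Ceryx’s actual Lua code. It makes several assumptions: a plain dict stands in for the Redis routing table, fake_issue_certificate is a hypothetical stand-in for real certificate issuance, and the 90-day lifetime is arbitrary.

```python
import time
from typing import Callable, Dict, Optional

# Assumption: an arbitrary 90-day lifetime, purely for illustration.
CERT_LIFETIME_SECONDS = 90 * 24 * 3600


def fake_issue_certificate(host: str) -> float:
    """Hypothetical stand-in for real issuance; returns the new expiry time."""
    return time.time() + CERT_LIFETIME_SECONDS


class Router:
    """Toy model of the lookup -> certificate -> proxy flow (not Ceryx's code)."""

    def __init__(self, issue: Callable[[str], float] = fake_issue_certificate) -> None:
        self.routes: Dict[str, str] = {}   # stand-in for Redis; keys stored lowercase
        self.certs: Dict[str, float] = {}  # host -> certificate expiry (unix time)
        self.issue = issue

    def handle(self, host: str) -> Optional[str]:
        key = host.lower()
        # 1) Look the host up in the routing table.
        target = self.routes.get(key)
        if target is None:
            # Unknown host: don't proxy, and don't request a certificate either.
            return None
        # 2) Make sure we hold a valid certificate; obtain one if missing or expired.
        if self.certs.get(key, 0.0) <= time.time():
            self.certs[key] = self.issue(key)
        # 3) Return the upstream the request should be proxied to.
        return target


if __name__ == "__main__":
    router = Router()
    router.routes["www.sabatino-pizza.com"] = "http://lb.example.com"  # hypothetical
    print(router.handle("www.sabatino-pizza.com"))      # http://lb.example.com
    print(router.handle("not-configured.example.net"))  # None
```

Checking the routing table before issuing anything matters. Otherwise, anyone could point arbitrary domains at the router and make it request certificates for them.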

You manage Ceryx through a super simple REST API that lets you create, update and delete routes.
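Here is a rough idea of what a thin client for such an API could look like, using only Python’s standard library. Treat the details as assumptions rather than Ceryx’s documented interface: the base URL is hypothetical, and the /api/routes paths and the source/target JSON fields are illustrative. Verify them against the API of the Ceryx version you actually run. The helpers only build requests, so nothing is sent until you pass one to urlopen.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# ASSUMPTIONS: hypothetical base URL; the /api/routes paths and the
# "source"/"target" fields are illustrative, not a verified API contract.
API_BASE = "http://ceryx-api.internal/api/routes"


def _build(method: str, source: Optional[str] = None,
           body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but don't send) a JSON request against the routes endpoint."""
    url = API_BASE if source is None else f"{API_BASE}/{urllib.parse.quote(source)}"
    data = None if body is None else json.dumps(body).encode("utf-8")
    headers = {} if data is None else {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=data, headers=headers, method=method)


def create_route(source: str, target: str) -> urllib.request.Request:
    return _build("POST", body={"source": source, "target": target})


def update_route(source: str, target: str) -> urllib.request.Request:
    return _build("PUT", source, {"source": source, "target": target})


def delete_route(source: str) -> urllib.request.Request:
    return _build("DELETE", source)


if __name__ == "__main__":
    req = create_route("www.sabatino-pizza.com", "http://lb.example.com")
    print(req.get_method(), req.full_url)  # POST http://ceryx-api.internal/api/routes
    # To actually send it: urllib.request.urlopen(req, timeout=5)
```

Keeping request construction separate from sending makes a helper like this easy to test, and easy to reuse behind an admin interface.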

We built an interface on top of this API so we can manage custom domains visually, which allows even my non-technical colleagues to manage domains.

Reflecting on the solution

With this router in place, we have effectively decoupled our own infrastructure from the custom domains. Adding a custom domain still requires a DNS record, but that record now points at the router instead of exposing the IP address of our loadbalancer.

At this point, you might ask yourself: what’s the difference? If we ever had to change the router’s IP address, we’d run into exactly the same problem.

Well, Ceryx is very well optimised, so it doesn’t need expensive hardware. In fact, we run it on one of the cheapest virtual servers available, costing a mere $5/month. Even with more than 100 domains being proxied through it, the server’s resources are barely used. A small, single-purpose server like that rarely needs to be moved or resized, so the router’s IP address stays put.

Changes to our core infrastructure are more likely. If, for example, we migrate the loadbalancer to another host and its IP address changes in the process, we only have to update our own DNS records.

The custom domains keep working without any DNS changes, because the router proxies them dynamically.
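To make this indirection concrete, here is a toy Python model of the three layers. It assumes that the router’s routes target a host name in our own zone rather than a raw IP, which is what “we only have to change our own DNS records” implies. All domain names and addresses are hypothetical; the IPs come from the RFC 5737 documentation ranges.

```python
# Hypothetical data; IPs are from the RFC 5737 documentation ranges.
ROUTER_IP = "198.51.100.5"

# Records in customers' zones: we don't control these, so they should never change.
customer_records = {f"shop{i}.customer{i}.example": ROUTER_IP for i in range(100)}

# Router table: each custom domain proxies to a *name* in our own zone, not an IP.
router_routes = {domain: "lb.example.com" for domain in customer_records}

# Our own zone: the only place the loadbalancer's IP address appears.
our_zone = {"lb.example.com": "203.0.113.10"}


def upstream_ip(custom_domain: str) -> str:
    """Follow a request: customer DNS -> router -> our DNS -> loadbalancer IP."""
    assert customer_records[custom_domain] == ROUTER_IP
    return our_zone[router_routes[custom_domain]]


if __name__ == "__main__":
    before = dict(customer_records)
    # Migrate the loadbalancer: exactly one record changes, in our own zone.
    our_zone["lb.example.com"] = "203.0.113.20"
    assert customer_records == before  # no customer had to touch their DNS
    print({upstream_ip(d) for d in customer_records})  # {'203.0.113.20'}
```

In practice this only holds if the router re-resolves that name (respecting its TTL) instead of caching the old IP address indefinitely.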

Another benefit: if we ever scale to another region, we can simply introduce a new router that proxies traffic to the loadbalancer in that region.

Finally, a word about Ceryx itself. I’ve been developing my multi-tenant application for over 10 years, and we introduced Ceryx about 5 years ago. Looking at the repository today, though, it doesn’t seem to be actively maintained.

If I were to start over today, I’d look at something more modern, like Traefik or AWS Elastic Load Balancing.

Thanks for sticking with me! I hope you learned a thing or two, and I’ll see you in the next one!